Understanding Scopus & SciVal & the THE World University Rankings

What data do university rankings use?

There are many global rankings organizations. These include magazines, newspapers, websites, higher education institutions and governments. Some rankings organizations specialize in international rankings, others in national or regional rankings, and a few do both. For an overview of seven major rankings organizations, download the quick guide.

Ranking methodologies rely on data inputs from a range of external resources. These resources often include university and researcher data, relevant data on human resources, student administration and finances, and data from reputation surveys, each varying with a ranking's niche and focus. In this guide, we focus on the bibliometrics used by Times Higher Education (THE) World University Rankings (WUR). For a broader look at university rankings, please visit our Guide to University Rankings.

Bibliometric datasets

One dataset most rankers use as part of their methodologies is bibliometric data. This data is associated with the documents or publications that researchers publish to share their findings. You can glean several data points and insights from bibliometric datasets, some of which are detailed below:

Number of citations received by publications from researchers at an institution.

Number of publications produced by researchers at an institution.

Research collaboration profiles, particularly international collaboration, of researchers and institutions.

Researchers’ institutional affiliation.

By using bibliometric datasets from a curated and authoritative source, rankers get a quantitative, consistent and comparable view of each university's research output.

What challenges do universities face related to the bibliometric datasets used in rankings?

Understanding and obtaining the actual bibliometric data used to calculate the rankings is difficult. Because of this, if you are planning a rankings strategy for your institution, you may find yourself creating proxies based on your best estimates, which limits the strategic value of your analysis.

Two ways to help address this challenge are:

Understand the methodology behind the ranking and pay attention to changes annually.

Remove the need for manual curation and development of proxy indicators.

In this guide, we first walk through the THE WUR methodology and explain the bibliometrics that underpin the THE World University Rankings. We then discuss ways to overcome the need for proxies and manual curation.

How are the Times Higher Education (THE) World University Rankings (WUR) calculated?

THE introduced a new methodology, WUR 3.0, with the 2024 Rankings released on September 27, 2023. The updates included a wider range of bibliometric measures, improved international metrics and an expanded role for knowledge transfer.

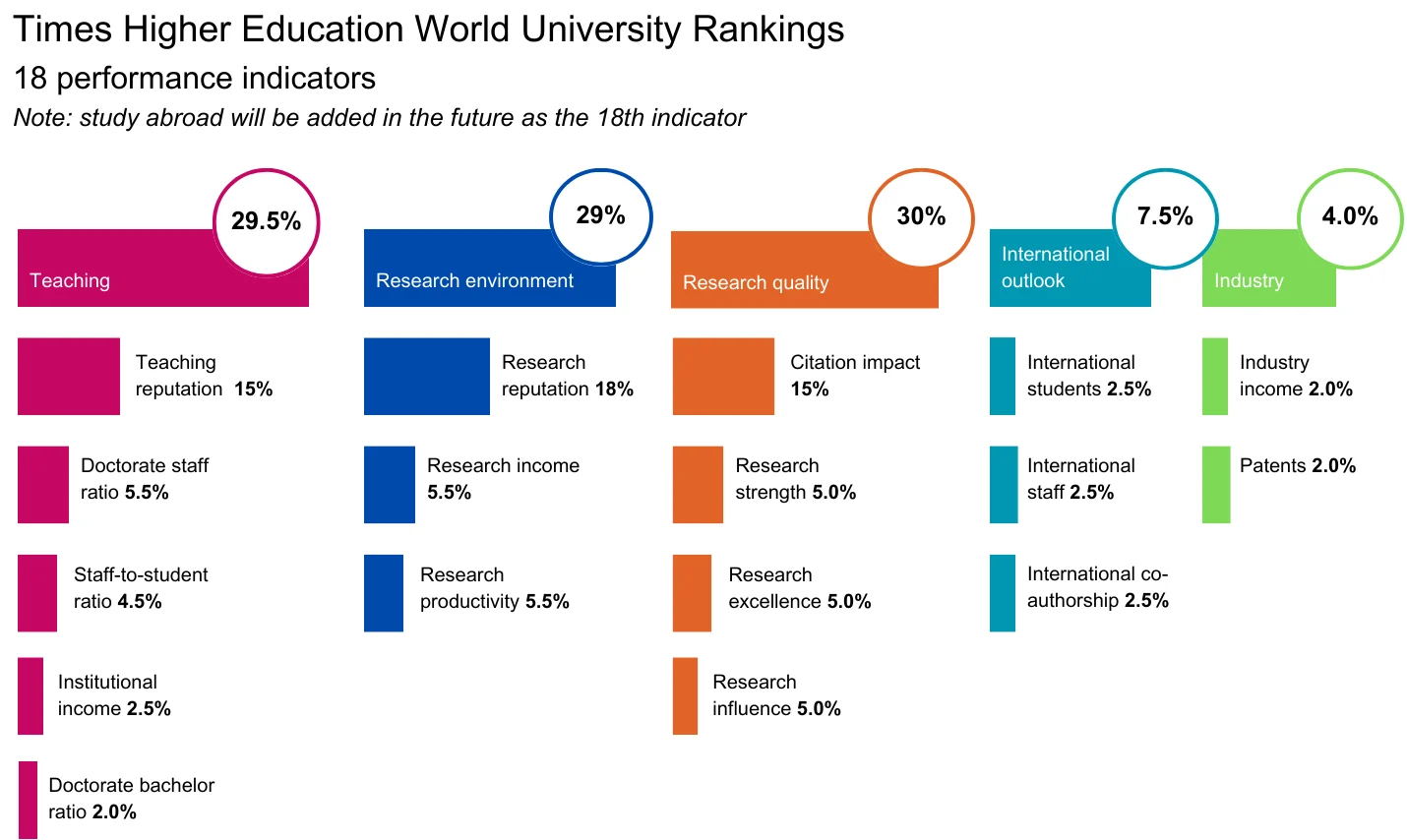

THE’s methodology groups 18 performance indicators into five major areas:

Teaching (the learning environment) 29.5%

Research Environment (volume, income and reputation) 29%

Research Quality (citation impact, research strength, research excellence and research influence) 30%

International Outlook (staff, students and research) 7.5%

Industry (income and patents) 4%

Data accessed from the THE website on September 28, 2023.

The weight distribution applied across the 18 performance indicators that THE uses in its WUR methodology (note: the study abroad indicator will be added in the future as the 18th). Adapted from THE website. Find more details below.
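
To make the weighting concrete, the pillar weights listed above can be checked and combined in a few lines of Python. This is a minimal sketch, not THE's published calculation: the pillar scores are invented values on an assumed 0 to 100 scale, and the plain weighted average in `overall_score` (a hypothetical helper) is an assumption about how the pillars combine. See THE's methodology guide for the actual procedure.

```python
# Pillar weights (percent) as listed above for THE WUR 2024.
PILLAR_WEIGHTS = {
    "Teaching": 29.5,
    "Research Environment": 29.0,
    "Research Quality": 30.0,
    "International Outlook": 7.5,
    "Industry": 4.0,
}


def overall_score(pillar_scores):
    """Weighted average of pillar scores (assumed to be on a 0-100 scale)."""
    total_weight = sum(PILLAR_WEIGHTS.values())
    weighted = sum(
        PILLAR_WEIGHTS[name] * pillar_scores[name] for name in PILLAR_WEIGHTS
    )
    return weighted / total_weight


# Hypothetical pillar scores for an imaginary university.
example_scores = {
    "Teaching": 60.0,
    "Research Environment": 50.0,
    "Research Quality": 80.0,
    "International Outlook": 70.0,
    "Industry": 40.0,
}

print("Total weight:", sum(PILLAR_WEIGHTS.values()))
print("Overall score:", round(overall_score(example_scores), 2))
```

Because Teaching, Research Environment and Research Quality together carry 88.5% of the weight, changes in those pillars move the overall figure far more than changes in International Outlook or Industry.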

How does Elsevier data contribute to the THE WUR?

Elsevier supports the ranking process by providing bibliometric datasets on an institution’s research. The methodology ultimately used in the WUR is determined by Times Higher Education. Elsevier partners with ranking organizations and research institutions to provide objective and authoritative bibliometric datasets in addition to bibliometric and data science expertise.

Elsevier bibliometric datasets and metrics account for 40% of the THE WUR overall ranking score. This includes the new metrics introduced with the WUR 2024: Research Strength, Research Excellence, Research Influence and Cited by Patents.

As stated by THE,

Elsevier’s bibliometrics help to measure how well each university is contributing to the sum of human knowledge: they tell us whose research has stood out, has been picked up and built on by other scholars and, most importantly, has been shared around the global scholarly community to expand the boundaries of human understanding, irrespective of discipline. (THE rankings methodology guide, accessed September 28, 2023)

Why does Times Higher Education use Scopus® Data?

Scopus® is a source of research publication and bibliometric data that several rankers rely on. In 2014, THE adopted Scopus as its source for research publication and bibliometric data. At that time, Trevor Barratt, former managing director of THE, said:

Research publication data for the rankings will in the future be drawn from Elsevier’s Scopus database. The new data source will allow us to analyze a deeper range of research activity from a wider range of institutions than at present, including those institutions from emerging economies that account for a growing portion of the world’s research output and which have shown a great hunger for THE’s trusted global performance metrics.

Times Higher Education (THE) uses Scopus Data in several rankings, including their World University Rankings, Impact Rankings and the Young Universities Rankings.

A snapshot of the depth and breadth of Elsevier's bibliometric data:

For the WUR 2024, Elsevier examined more than 134 million citations to 16.5 million journal articles, article reviews, conference proceedings, books and book chapters over a five-year period. The data includes over 27,950 active peer-reviewed journals indexed by Scopus and all indexed publications between 2018 and 2022. Citations to these publications made in the six years from 2018 to 2023 are also collected.

A closer look at where Elsevier bibliometrics contribute

Citation Impact (15% of total score)

Elsevier has calculated the Citation Impact score per institution for Times Higher Education since 2015. The score is derived from the Field Weighted Citation Impact (FWCI) metric.

FWCI is a normalized citation metric that accounts for different citation practices across disciplines and publication types. For each publication produced by an entity (in the case of rankings, a university), the actual number of citations received is divided by the average number of citations received by all similar publications; the entity's FWCI is the mean of these ratios. ‘Similar publications’ are publications in the Scopus database that have the same publication year, type and discipline, as defined by the Scopus journal classification system (ASJC).

FWCI, therefore, normalizes for the subject area or discipline, year of publication and publication type (see Glossary). Within the THE WUR, publications with more than 1,000 authors are counted fractionally. An FWCI score of 1.00 indicates that the publications produced by the entity of interest (here, a university) have been cited on par with the global average for similar publications.
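
As a rough illustration of the definition above, FWCI for a set of publications can be sketched as the mean of per-publication ratios of actual to expected citations. The records and the `expected` values below are invented for the example. In practice the expected values come from Scopus (the average for publications of the same year, type and discipline), and THE-specific adjustments such as fractional counting are not modelled here.

```python
from statistics import mean


def publication_fwci(citations, expected_citations):
    """Ratio of actual citations to the average for similar publications."""
    return citations / expected_citations


def entity_fwci(publications):
    """Mean of the per-publication ratios for an entity such as a university."""
    return mean(
        publication_fwci(p["citations"], p["expected"]) for p in publications
    )


# Hypothetical publications: 'expected' is the assumed world average for
# publications of the same year, type and discipline.
pubs = [
    {"citations": 12, "expected": 6.0},  # cited twice as often as expected
    {"citations": 3, "expected": 6.0},   # cited half as often as expected
    {"citations": 5, "expected": 10.0},  # cited half as often as expected
]

print("Entity FWCI:", entity_fwci(pubs))
```

In this made-up set, one strongly cited paper offsets two weakly cited ones, so the entity lands on the global average.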

It is important to note that the THE WUR applies a 5-year citation window to the FWCI calculation for their methodology, whereas the standard FWCI methodology, used in products such as SciVal and Scopus, uses a 4-year citation window. Additionally, for territories or regions that cannot be country-normalized, THE's data science team applies a series of further normalizations to determine the final Citation Impact score.

Research Strength (5% of total score)

New to the WUR 2024, the Research Strength measurement adds a view of research quality that reduces the effect of extremely highly cited publications. It is based on the 75th percentile of the 5-year FWCI and contributes 5% of the final rankings score.
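
The following sketch shows why a 75th percentile is less sensitive to a single extremely highly cited publication than a mean. The FWCI values are invented, and the percentile convention used here (Python's `statistics.quantiles` with the `inclusive` method) is an assumption; the source does not state which convention THE applies.

```python
from statistics import mean, quantiles

# Hypothetical publication-level FWCI values; one paper is an extreme outlier.
fwci_values = [0.2, 0.5, 1.0, 1.5, 40.0]

mean_fwci = mean(fwci_values)

# quantiles(..., n=4) returns the three quartile cut points;
# index 2 is the 75th percentile.
p75_fwci = quantiles(fwci_values, n=4, method="inclusive")[2]

print("Mean FWCI:", round(mean_fwci, 2))
print("75th percentile FWCI:", p75_fwci)
```

The outlier pulls the mean far above the level of a typical paper in the set, while the 75th percentile stays close to the bulk of the distribution.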

Research Excellence (5% of total score)

Another new addition to THE’s 3.0 methodology, Research Excellence aims to recognize an institution’s contribution to the best research per subject. The calculation is derived from the number of publications a ranked university has in the top 10% of all publications by 5-year FWCI, and it contributes 5% of the final rankings score. It is normalized by year, subject and staff numbers at the university.
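
A simplified view of the counting step might look like the sketch below. It assumes a single, already-known FWCI threshold for the world top 10% and divides the count by staff numbers. The threshold, the FWCI values, the staff count and the function name `research_excellence_raw` are all hypothetical, and the year and subject normalization described above is not modelled.

```python
def research_excellence_raw(fwci_values, top10_threshold, staff_count):
    """Count publications at or above the top-10% threshold, then scale by staff."""
    top_count = sum(1 for value in fwci_values if value >= top10_threshold)
    return top_count, top_count / staff_count


# Hypothetical inputs for an imaginary university.
fwci_values = [0.4, 1.1, 3.2, 5.0, 0.9, 3.0]
top10_threshold = 3.0   # assumed FWCI cut-off for the world top 10%
staff_count = 10        # assumed number of academic staff

count, per_staff = research_excellence_raw(fwci_values, top10_threshold, staff_count)
print("Top-10% publications:", count)
print("Per staff member:", per_staff)
```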

Research Influence (5% of total score)

A third new indicator in the Research Quality category is Research Influence, making up 5% of the total score. It takes “a broader look at excellence”: the influence a publication has by being recognized, through citation, in other influential publications (see THE website, accessed on September 28, 2023). The indicator looks at the network of citations around publications to understand their wider impact or “importance”, for example through being cited by other ‘important’ publications that are themselves highly cited. It uses an iterative method that takes citation patterns into account to determine the “importance” of publications, while also considering differences in citation patterns across subject areas.

For both the Research Excellence and the Research Influence indicators, Elsevier provides the raw data for THE to use in their calculations.

Research Productivity (5.5% of total score)

THE's Research Productivity indicator provides insight into researchers’ publishing activity through their ability to publish in quality peer-reviewed titles indexed in Elsevier’s Scopus database. The indicator is scaled to account for institution size and normalized for subject/discipline.

International Co-authorship (2.5% of total score)

International Co-authorship measures the proportion of a university's publications with at least one international co-author. This metric is based on Elsevier’s Scopus Data.
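
The proportion described above can be sketched as follows. Each publication is represented only by the set of countries of its authors' affiliations; the sample data, the country codes and the function name `international_share` are illustrative assumptions, not the Scopus data model.

```python
def international_share(publications, home_country):
    """Share of publications with at least one author affiliated abroad."""
    if not publications:
        return 0.0
    international = sum(
        1
        for countries in publications
        if any(country != home_country for country in countries)
    )
    return international / len(publications)


# Each hypothetical publication is the set of author affiliation countries.
pubs = [
    {"NL"},
    {"NL", "DE"},
    {"NL", "US", "JP"},
    {"NL"},
]

print("International co-authorship share:", international_share(pubs, "NL"))
```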

Patents (2.0% of total score)

Patents, another new indicator introduced with the WUR 2024, recognizes how an institution supports its national economy through technology transfer. It is calculated based on the count of patents citing an entity’s published research. The data is provided by Elsevier and relates to patents sourced from the World Intellectual Property Organisation, the European Patent Office, and the patent offices of the US, the UK and Japan, published between 2018 and 2022 for THE WUR 2024.
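
As a quick consistency check, the indicator weights given in the headings above add up to the 40% share attributed to Elsevier data, and the first four add up to the 30% Research Quality pillar. The dictionary below simply restates those published weights.

```python
# Indicator weights (percent of total score) taken from the headings above.
ELSEVIER_INDICATOR_WEIGHTS = {
    "Citation Impact": 15.0,
    "Research Strength": 5.0,
    "Research Excellence": 5.0,
    "Research Influence": 5.0,
    "Research Productivity": 5.5,
    "International Co-authorship": 2.5,
    "Patents": 2.0,
}

RESEARCH_QUALITY_INDICATORS = (
    "Citation Impact",
    "Research Strength",
    "Research Excellence",
    "Research Influence",
)

total_share = sum(ELSEVIER_INDICATOR_WEIGHTS.values())
research_quality_share = sum(
    ELSEVIER_INDICATOR_WEIGHTS[name] for name in RESEARCH_QUALITY_INDICATORS
)

print("Elsevier-supplied share:", total_share)
print("Research Quality pillar:", research_quality_share)
```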

For a more in-depth understanding of THE’s methodology and calculations, please refer to THE’s website and methodology guide.

How can I be included in the Times Higher Education world rankings?

Times Higher Education does not rank all universities in their World University Rankings. They use three key criteria to determine which universities to rank:

Publish. You are required to publish a sufficient number of academic papers over a five-year period. Currently, the threshold is more than 1,000 papers.

Teach undergraduates.

Wide Focus. Work across a range of subjects.

Other types of rankings have different inclusion criteria.

How do Scopus & SciVal work together to contribute to THE WUR?

Elsevier offers research information and analytics solutions such as Scopus and SciVal to help universities evaluate their research performance, both in general and in relation to university rankings. You can use these solutions to analyze the actual bibliometric datasets used by rankers such as THE and QS in their World University Rankings methodologies, or to analyze your publication output, citation impact, collaboration patterns and other factors that can influence your ranking outcomes.

How are the two related? Scopus is the authoritative and trusted bibliometric database the major rankers choose to provide the bibliometric datasets they use in their methodologies. SciVal adds analytical power and flexibility to the same bibliometric data, offering a range of metrics (such as the 5-year FWCI), analyses and reports to investigate and understand the drivers behind the bibliometrics used in rankings.

What is Scopus Data?

It is crucial to understand Scopus’s role in the rankings to ensure that rankers such as THE accurately reflect your institution.

Scopus is an authoritative, source-neutral abstract and citation database curated by independent subject matter experts.

There are three things you need to know about Scopus:

What is in the database, and which parts inform THE World University Rankings?

What is an institution or organization profile, and how can I view mine?

What are author profiles?

The Scopus Database

Scopus uniquely combines a comprehensive, curated abstract and citation database with enriched data and linked scholarly content. It is a structured source of publication items: the data is organized into relationships among, for example, authors, citations and institutions.

Scopus contains millions of data points from 7,000+ publishers, platforms and sources. In addition, Scopus uses artificial intelligence to connect the data to the correct authors and institutions.

Scopus is organized in a structured way so you (and Times Higher Education) can use it to find insights about your research. Further, by using the API, data can be extracted and used in other institutional systems.
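
The API access mentioned above might look like the following sketch. It assumes the Scopus Search API endpoint, the `X-ELS-APIKey` header and the `AF-ID` and `PUBYEAR` query fields as described on the Elsevier Developer Portal, so check the current API documentation before relying on any of them. The affiliation ID and API key are placeholders, and the helper name `build_scopus_request` is hypothetical. The request is only built here, not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumed endpoint of the Scopus Search API.
SCOPUS_SEARCH_URL = "https://api.elsevier.com/content/search/scopus"


def build_scopus_request(affiliation_id, first_year, last_year, api_key):
    """Build (but do not send) a search request for one affiliation's output."""
    query = (
        f"AF-ID({affiliation_id}) "
        f"AND PUBYEAR > {first_year - 1} AND PUBYEAR < {last_year + 1}"
    )
    params = urlencode({"query": query, "count": 25})
    return Request(
        f"{SCOPUS_SEARCH_URL}?{params}",
        headers={"X-ELS-APIKey": api_key, "Accept": "application/json"},
    )


# Placeholder affiliation ID and API key; replace with your own values.
request = build_scopus_request("60000000", 2018, 2022, "YOUR_API_KEY")
print(request.full_url)
```

Sending the request (for example with `urllib.request.urlopen`) requires a valid API key and the appropriate institutional entitlements.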

Author Profiles, Institution Profiles and Affiliation Hierarchy

When discussing rankings, it is important to discuss Scopus Author and Institution Profiles and the affiliation hierarchy. When calculating their ranking reports, THE uses these to attribute the correct research to your institution.

The Institution Profile Wizard (IPW) allows institutions to modify their institution profiles and correct their organizational hierarchies, ensuring that the institution profiles grouped together and displayed on Scopus are accurate. This gives ranking organizations a clear picture of each institution's research activity.

Authors can also check and validate their Scopus Author Profiles through the free Author Profile Wizard (APW). Although Scopus has a high level of accuracy and recall for Author Profiles, enabling authors themselves to check and validate their profiles further improves both data accuracy and data confidence.

Any author can check their profile through the free APW; at institutions with high Scopus adoption, however, researchers tend to be more active in accessing and maintaining their author profiles.

How can I better understand my university’s research & ranking with SciVal?

SciVal is built upon the foundation of trusted, globally sourced data from Scopus, bolstered by supplementary resources like policy and patent data. It employs advanced data science and artificial intelligence methodologies to empower you with analytical capabilities for gaining profound insights into global research activities.

In other words, SciVal brings to life insights into your institution’s research activities against peer benchmarks and emerging research trends to enhance strategy and decision-making. With uniquely adaptable analyses and meaningful insights, you can evaluate and showcase expertise and impact at the researcher, department, faculty and university levels and support future funding success.

How can SciVal help you with your rankings analyses and strategies, and remove the need for proxies?

Rather than relying on manual curation and the development of proxy indicators, SciVal’s rankings analysis enables you to investigate, understand and generate insights based on the actual bibliometric datasets used in the THE World University Rankings (THE WUR), as well as the bibliometric datasets used in the QS World University Rankings (QS WUR) and the THE Impact Rankings.

For THE WUR, this means you can analyze, understand and gain insights from the actual bibliometric dataset contributing to 40% of the overall ranking score. In the image below, you see this in action.

Analyze, understand and generate insights based on the actual FWCI and citation scores

Here you see the Rankings analysis for a university, including a detailed view of its performance across each bibliometric indicator used in the THE WUR 2024. THE WUR editions from 2021 to 2023 are also available, enabling you to analyze the drivers behind the scores, including the new metrics introduced in 2024. Extensive metric guidance also helps you learn about the previous and new ranking methodologies.

The insights you gather from the ranking analysis feature are designed to help you get a deeper understanding of your position and the drivers underlying the rankings, and to inform your ranking strategy.

Watch the video below for an example of how SciVal and Scopus help bring clarity to university rankings.

Demystify University Rankings: A closer look

How can I better understand our international and industry collaboration partners through the lens of rankings?

International and cross-sector collaboration are important elements, not only in ranking methodologies, but across your research strategy and department-level plans; therefore, evaluating your collaborations is invaluable. With SciVal, you can analyze your current and potential collaboration partners based on a wide array of metrics, including their overall rank across four different rankings: THE World University Rankings, THE Impact Rankings, QS Rankings and Shanghai Rankings.

How can I understand, monitor and benchmark our institution against our peers?

Understanding the performance of peer institutions is also a key part of your rankings analysis. For example, you might be interested in analyzing how you compare in international collaboration with the universities positioned above and below you, while also tracking the THE Citation Score. Here you may discover key insights that help inform plans moving forward. While it is easy to see in ranking tables which institutions are ranked similarly to you, having the flexibility to define the institutions you want to benchmark against, and then to investigate the bibliometric dataset driving key aspects of their rank, helps you develop a clearer picture.

This is why having the bibliometrics used by rankings providers in SciVal is additionally helpful. You can select THE World University Rankings and build your own heatmap, visualizing trends or creating charts to analyze a selected set of institutions across all the bibliometric drivers.

Although SciVal cannot predict where you will be placed in any future ranking, especially as there are many factors involved, it can help you better address questions related to the bibliometric datasets and indicators that inform current rankings. The insights gained can be fed back into your strategy and/or development plans.

Overall, no matter your position and role at a university, if you need to understand university rankings there are a variety of ways SciVal can support you, such as:

Creating management-level reports based on deep-dives into the bibliometric-based data used in major rankings.

Creating benchmarking reports against peers across the bibliometrics used in rankings.

Using analyses to inform the development of faculty and department-level strategic plans and publication strategies.

Summary

In conclusion, Scopus is an authoritative and comprehensive database, trusted by major ranking organizations, for accurate and connected bibliometric datasets. It is also at the core of SciVal. By combining the analytical power and flexibility of SciVal, together with the breadth and depth of connected bibliometric data from Scopus, you gain a digital consultant that not only helps you answer questions related to rankings, but also helps uncover insights you can apply across your research programs and strategic planning.

Do you want to learn even more about Scopus and SciVal?