

October 17, 2023

By Linda Willems

Researcher Basil Mahfouz hopes the answer will help him design a powerful tool for policymakers

How do governments select the research evidence they use to guide their policymaking — particularly when responding to a global crisis?

The obvious answer is that they draw their inspiration from current or highly cited publications. However, a new study by UCL (University College London) researcher Basil Mahfouz suggests the process is not so clear-cut.

Basil is a third-year PhD student in UCL’s Department of Science, Technology, Engineering and Public Policy (STEaPP). His PhD project is supported by Elsevier’s International Center for the Study of Research (ICSR) and explores how research impacts society.

As part of that work, Basil recently conducted a case study with PhD supervisors Prof Sir Geoff Mulgan and Prof Licia Capra tracking the impact of research on education policy during COVID-19. As Basil explained:

Basil Mahfouz

The pandemic resulted in measures, such as school closures, that disrupted learning for more than 1.5 billion students. And education policymakers worldwide discussed these measures in thousands of policy documents, most of which referenced academic research.

He added:

There were 450,000 scholarly papers published about COVID-19 between March 2020 and December 2022. To put that in context, that’s almost as many as all the research papers on climate change ever published. So, we were interested to see how well education policymakers took advantage of that vast amount of literature.

What they discovered surprised them.

Basil and his co-authors focused on policies issued between March 2020 and December 2022 that contained recommendations or comments on COVID-19 measures for educational institutions. They found that more than 75% of the peer-reviewed papers cited in these policies were published prior to 2020. Using natural language processing, they also established that for 48% of those older research papers, there were newer articles available with comparable abstracts. Basil pointed out: “The average was 70 new papers for every old paper, although it’s worth noting that some papers had a lot while others had very few.”

While only 20% of the education research cited in the policies was published later than 2020, that figure rose to more than 80% for medical research. Basil said: “The medical research findings aren’t surprising given that there was no research on COVID prior to the pandemic. However, that’s a very low percentage for education.”

According to Basil, in the case of education research, this is partly attributable to the fact that a high proportion of it is localized. He added: “We also carried out a comparative analysis of research usage between the USA, the UK, the European Union and IGOs (intergovernmental organizations involving two or more nations). We found that only 0.62% of citations were shared across all four jurisdictions. Scientific articles referenced by IGOs were the most ubiquitous, accounting for over 10% of citations across all other policies.”
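To make the "shared across all four jurisdictions" figure concrete, here is a minimal sketch of one way such a share could be computed. It is not the authors' code. The function name `shared_share`, the toy paper IDs, and the choice of denominator (all unique papers cited by any jurisdiction) are assumptions for illustration; the article does not say how the 0.62% was calculated.

```python
def shared_share(citations_by_jurisdiction):
    """Fraction of all unique cited papers that appear in every jurisdiction.

    Assumption: the denominator is the union of papers cited anywhere.
    """
    sets = [set(papers) for papers in citations_by_jurisdiction.values()]
    if not sets:
        return 0.0
    all_cited = set().union(*sets)      # papers cited by at least one jurisdiction
    in_all = set.intersection(*sets)    # papers cited by every jurisdiction
    return len(in_all) / len(all_cited) if all_cited else 0.0


# Hypothetical toy data, not taken from the study.
toy = {
    "USA": ["a", "b", "c"],
    "UK": ["a", "d"],
    "EU": ["a", "e"],
    "IGO": ["a", "b", "f"],
}
share = shared_share(toy)
print(f"{share:.1%} of cited papers appear in all four jurisdictions")
```

In this toy example only one of six papers is cited everywhere. A low share like this is what one would expect if much of the cited research is local to each jurisdiction.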

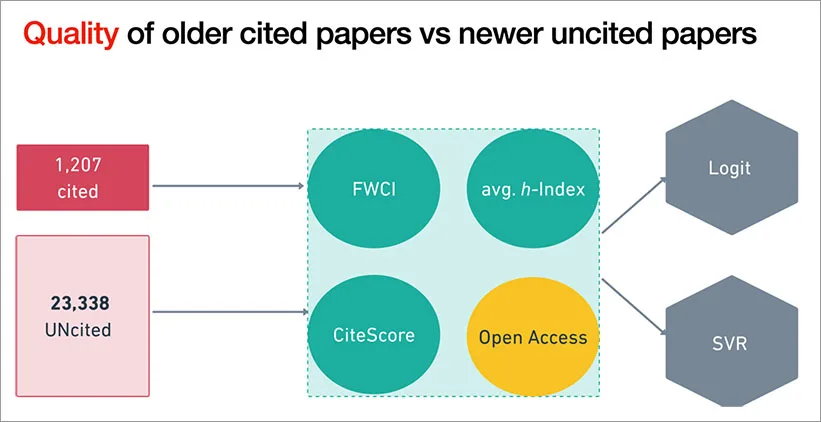

Basil divided the papers into two groups: those that were cited in policy and those that were found to be relevant to the policies but not cited. For each paper, he checked proxies of research excellence such as field-weighted citation impact (FWCI), the average h-index for all co-authors, and the CiteScore of the journal in which it was published.

The average h-index of authors for papers published during 2021 and 2022 which were found to be relevant to the policies but were not cited (blue), and papers published prior to 2020 that were cited in the policies (orange).

“We found that, on average, the older papers performed better across all indicators,” he said. “However, overall, the link between the indicators and policy citations was relatively weak.”

According to Basil, this may be due to the fact that during the pandemic, under-pressure policymakers reverted to “research they were already familiar with or articles published by people they trusted.”

The study authors also checked for a link between research accessibility and policy citations. “Interestingly, we found that most papers cited in policies weren’t open access,” Basil noted. “I think there are multiple reasons for that; for example, open access papers were not as prevalent 10 years ago as they are today.”

For the project, Basil worked with Elsevier’s ICSR Lab, a cloud-based computational platform that researchers can use to analyze large structured datasets, including those that power Elsevier solutions such as Scopus and PlumX Metrics. He also partnered with Overton, a global database of policy documents and their citations.

Overton supplied the authors with a list of 2,452 policies that fit their criteria. The policies spanned 49 countries in total, although the majority (86%) were from the US, the UK and the EU.

Collectively, the policies included circa 24,000 scholarly citations, which after deduplication resolved to around 12,000 unique papers. Basil used the DOIs (digital object identifiers) of these papers to match them to datasets held in the ICSR Lab, which gave him the metadata for 8,818 of the policy-cited articles.
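The deduplicate-then-match step described above can be sketched in a few lines. This is an illustration under stated assumptions, not the pipeline the authors ran. The helper names `normalize_doi` and `match_citations`, the in-memory `metadata` dictionary standing in for the ICSR Lab datasets, and the example DOIs are all hypothetical.

```python
def normalize_doi(raw):
    """Lowercase a DOI and strip common URL or 'doi:' prefixes so duplicates compare equal."""
    doi = raw.strip().lower()
    prefixes = (
        "https://doi.org/",
        "http://doi.org/",
        "https://dx.doi.org/",
        "http://dx.doi.org/",
        "doi:",
    )
    for prefix in prefixes:
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
            break
    return doi.strip()


def match_citations(cited_dois, metadata_by_doi):
    """Deduplicate cited DOIs, then split them into matched and unmatched papers."""
    unique = {normalize_doi(d) for d in cited_dois if d and d.strip()}
    matched = {doi: metadata_by_doi[doi] for doi in unique if doi in metadata_by_doi}
    unmatched = unique - set(matched)
    return unique, matched, unmatched


# Hypothetical toy data, not taken from the study.
citations = [
    "10.1000/abc123",
    "https://doi.org/10.1000/ABC123",  # same paper written in a different form
    "doi:10.1000/xyz789",
    "10.1000/not-indexed",
]
metadata = {
    "10.1000/abc123": {"year": 2018, "title": "Example paper A"},
    "10.1000/xyz789": {"year": 2021, "title": "Example paper B"},
}

unique, matched, unmatched = match_citations(citations, metadata)
print(len(citations), "citations,", len(unique), "unique papers,", len(matched), "with metadata")
```

The same funnel shape appears in the study's numbers: raw citations shrink to unique papers, and only the unique papers found in the reference dataset carry metadata for further analysis.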

After establishing that many of the policies cited older papers, Basil and his co-authors looked to see whether there were newer papers (post-2020) on the same topic that policymakers could have used.

To do this, they turned to SciVal Topic Prominence. Basil explained: “SciVal assigns each paper to one of 96,000 different topics; these are collections of publications with a common intellectual interest. We matched 2,589 of the cited papers to a topic. And then we performed natural language processing on their abstracts to discover whether there were other, similar papers on that topic that were published during 2021 and 2022. We discovered that for 48% of these older papers, there were newer articles on the same topic available.”
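The article does not say which natural language processing technique was applied to the abstracts. As a rough illustration only, the sketch below swaps in a simple bag-of-words cosine similarity and looks for 2021 and 2022 papers in the same topic whose abstracts resemble an older cited paper. The field names (`topic_id`, `year`, `abstract`), the 0.5 threshold, and the sample abstracts are all assumptions.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Split text into lowercase word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def newer_alternatives(old_paper, candidates, threshold=0.5):
    """Return (id, score) for newer same-topic papers with similar abstracts."""
    old_vec = Counter(tokenize(old_paper["abstract"]))
    hits = []
    for paper in candidates:
        if paper["topic_id"] != old_paper["topic_id"]:
            continue  # only compare within the same topic
        if paper["year"] not in (2021, 2022):
            continue  # only consider papers from the newer window
        score = cosine_similarity(old_vec, Counter(tokenize(paper["abstract"])))
        if score >= threshold:
            hits.append((paper["id"], round(score, 2)))
    return sorted(hits, key=lambda h: h[1], reverse=True)


# Hypothetical toy data, not taken from the study.
old = {"id": "old1", "topic_id": 7, "year": 2015,
       "abstract": "School closures reduce student learning outcomes"}
pool = [
    {"id": "new1", "topic_id": 7, "year": 2021,
     "abstract": "School closures and student learning outcomes during the pandemic"},
    {"id": "new2", "topic_id": 7, "year": 2022,
     "abstract": "Teacher salaries in rural districts"},
    {"id": "new3", "topic_id": 9, "year": 2021,
     "abstract": "School closures reduce student learning outcomes"},
    {"id": "new4", "topic_id": 7, "year": 2019,
     "abstract": "School closures reduce student learning outcomes"},
]
hits = newer_alternatives(old, pool)
print(hits)
```

Restricting the comparison to papers in the same topic keeps the candidate pool small before any text comparison is done. A production analysis would likely use a stronger text representation than raw word counts.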

According to Basil, the involvement of the ICSR Lab was pivotal to the project: “Working with the Lab offers you flexibility — you can easily shift or adapt your project as you make fresh discoveries. And while other datasets might contain higher overall counts of papers, they also come with limitations; for example, you don’t only get peer-reviewed papers, and it’s not easy to identify what is what.

“With the ICSR Lab, I know that all the papers I’m analyzing were published in peer-reviewed journals with good processes because they’ve passed Scopus’ journal selection criteria. And alongside the standard publication metadata, I get a lot of additional information. This allows me to slice and dice the data in different ways and opens up avenues of more nuanced analysis.”

He added: “The platform isn’t just about data — it also provides computing power. And that was important for us given the huge datasets we were analyzing.”

Elsevier’s Dr Andrew Plume, President of the ICSR and a supervisor for Basil’s study, said: “The project and its first tranche of insights into the dynamics of public policymaking in times of crisis have been illuminating to us here in the center.” He added:

This work demonstrates the value of connecting the Overton policy database with Scopus publication data to ask questions not previously amenable to analysis at scale. With a rising desire across academia and government to understand and evaluate the contribution of research to society, this ability to connect the pathways from research to societal impact will become ever more critical.

Andrew Plume, PhD

President, International Center for the Study of Research (ICSR), Elsevier

Basil aims to continue refining the model he’s built for this case study: for example, by adding a comparative analysis between countries and continuing to optimize the parameters. He also plans to run new case studies on key policy topics such as climate change. He explained:

The goal is to create a pipeline, a model that you can slot different topics into for analysis. I’d like to turn this into a tool that could potentially support two use cases.

The first of these is providing governments with data for funding decisions.

“Each year, government agencies globally invest around $600 billion into research. That’s public money meant to help improve the research ecosystem and also benefit the public,” Basil said. “But what are they funding? How are decisions made? A tool like this would provide them with data to guide decisions about where to invest for more evidence-based public policy.”

Basil also sees an opportunity to use it as a diagnostic tool to track exactly how research is making its way into policy. “You can benchmark policies, funders and institutions. You can help policymakers understand how well they are absorbing knowledge and where they need to improve. This will be fairer for researchers. At the moment, there is an assumption that if researcher A has more policy citations than researcher B, the former’s research is probably better. But perhaps researcher A is in a country where policymakers are better at finding and absorbing research. We could account for that and provide more context-sensitive indicators.”